Azure CosmosDB (Azure Cosmos DB, the globally distributed database service formerly known as DocumentDB) is a superset of the service once known as “Azure DocumentDB”. In short: “Azure CosmosDB” = “Azure DocumentDB” + new data types + new APIs.

You can try CosmosDB for free on Azure, or you can set up the CosmosDB emulator in your local environment by following my previous blog. I am becoming a fan of .NET Core with all its features, and it is getting better day by day. In this blog post I just want to take the initial steps of working with CosmosDB from a .NET Core client. After reading this blog, you should be able to do the following with CosmosDB programmatically:

- Create Database

- Create Collection

- Create Documents

- Query a Document

- Delete Database

Prerequisites:

I have the following in my local environment. I hope you already have them 😊; if not, start setting them up.

- Windows 10 OS

- Azure CosmosDB Emulator

- Visual Studio Code editor with C# plugin

- .NET Core 2.0

OK folks, let's get started.

Step 1: Create .NET Core Console Application: As in other tutorials, to keep it simple I will create a .NET Core console app to work with CosmosDB. With .NET Core, we now have a CLI. Let's create the new app with the following steps (which I also mentioned in the previous blog).

- Open a command prompt or PowerShell (Administrator mode)

- Navigate to your folder where you need to create the app

- Execute the following command

dotnet new console -n CosmosCoreClient -o CosmosCoreClient

Here, -n denotes the name of the application, and -o tells the CLI to create a folder with that name and create the application inside that folder.

Open the newly created project in Visual Studio Code by executing the following command from inside the project folder:

code .

Here is a screenshot of how it should look on your end:

I am using a C# 7.1 feature to create an async Main method in my console app. For that, we need to make a small change to the project file. Open the CosmosCoreClient.csproj file and add the following XML node to the PropertyGroup node.

<LangVersion>latest</LangVersion>

After changes, your csproj file should look like below:
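As a rough guide, here is a minimal sketch of what the project file could look like after the change. It assumes the default template that `dotnet new console` generates for .NET Core 2.0; your generated file may contain other properties, and the only line added by hand is LangVersion.

```xml
<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <OutputType>Exe</OutputType>
    <TargetFramework>netcoreapp2.0</TargetFramework>
    <!-- Added by hand: enables C# 7.1 features such as async Main -->
    <LangVersion>latest</LangVersion>
  </PropertyGroup>

</Project>
```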

Let's move on to the core part, integrating CosmosDB with the .NET Core application, and start building the features.

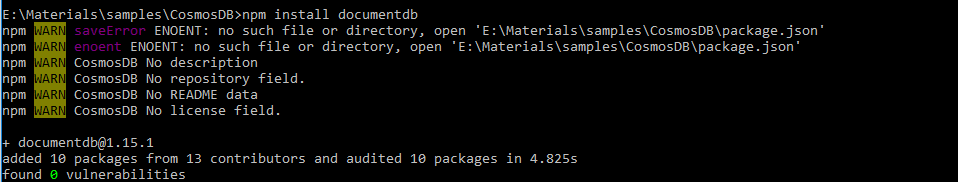

Step 2: Add CosmosDB Nuget Package

If you have followed the steps above, you have successfully created the application. Next, add a reference to the CosmosDB NuGet package to get the client libraries. The advantage of these libraries is that they make it easy to work with CosmosDB.

- Open a command prompt and navigate to root of your project.

- Execute the following command

dotnet add package Microsoft.Azure.DocumentDB.Core

You might wonder why the package name has DocumentDB in it. DocumentDB is where the whole journey started, and hence the name sticks in the Cosmos world too. If you now look at the project file, a new reference to DocumentDB will have been added. Here is the screenshot of my project file.

Step 3: Creating Model for CosmosDB

Let's build the database. If you are new to CosmosDB, you should know that it has a query playground at https://www.documentdb.com/sql/demo. It is a sandboxed environment with a couple of databases where you can try out different queries. For this post, let's create a course database locally.

Since our application deals with courses, we need 4 models here.

- Course

- Session

- Teacher

- Student

Here are the four models.

Course.cs

using Microsoft.Azure.Documents;

using Newtonsoft.Json;

using System;

using System.Collections.Generic;

public class Course : Document

{

[JsonProperty(PropertyName = "CourseId")]

public Guid CourseId { get; set; }

[JsonProperty(PropertyName = "Name")]

public string Name

{

get

{

return GetPropertyValue<string>("Name");

}

set

{

SetPropertyValue("Name", value);

}

}

[JsonProperty(PropertyName = "Sessions")]

public List<Session> Sessions { get; set; }

[JsonProperty(PropertyName = "Teacher")]

public Teacher Teacher { get; set; }

[JsonProperty(PropertyName = "Students")]

public List<Student> Students { get; set; }

}

Session.cs

using System;

public class Session

{

public Guid SessionId { get; set; }

public string Name { get; set; }

public int MaterialsCount { get; set; }

}

Teacher.cs

using System;

public class Teacher

{

public Guid TeacherId { get; set; }

public string FullName { get; set; }

public int Age { get; set; }

}

Student.cs

using System;

public class Student

{

public Guid StudentId { get; set; }

public string FullName { get; set; }

}

Let's create the client as the next step.

Step 4: Creating the Client

Next, you need to instantiate the CosmosDB client before doing anything with the database. In order to connect to the local instance of CosmosDB, we need to configure 2 things:

- URL of the CosmosDB instance

- Authentication key needed to authenticate

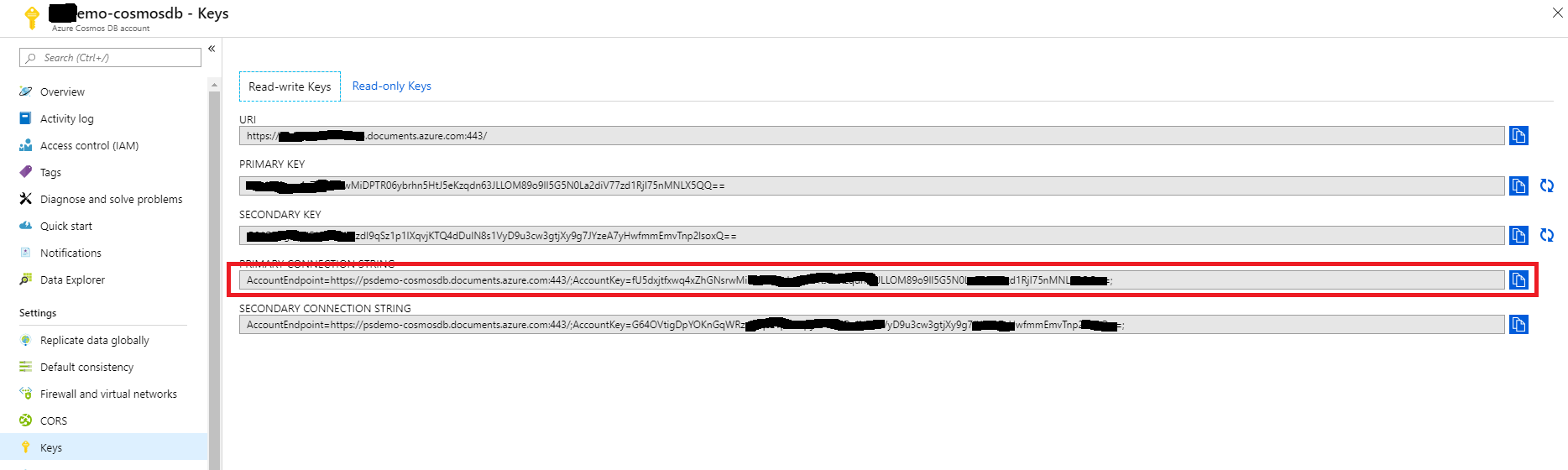

When you start the local CosmosDB emulator, the database instance is available at https://localhost:8081. The auth key for the local emulator is a static key, and you can find it in this article (https://docs.microsoft.com/en-us/azure/cosmos-db/local-emulator#authenticating-requests). This key works only with the local emulator and won't work with your Azure instance; if you are using an Azure instance, you can find its key in the Azure portal. Here is the code snippet to instantiate the client:

static string endpointUri = "https://localhost:8081";

static string authKey = "C2y6yDjf5/R+ob0N8A7Cgv30VRDJIWEHLM+4QDU5DE2nQ9nDuVTqobD4b8mGGyPMbIZnqyMsEcaGQy67XIw/Jw==";

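// These ids match the ones used by the create methods later in this post

static string dbName = "courseDatabase";

static string collectionName = "courseDocumentCollection";

// async Main needs C# 7.1 and "using System.Threading.Tasks;"

static async Task Main(string[] args)

{

Console.WriteLine("Press Enter to run");

Console.ReadLine();

await Run();

Console.ReadLine();

}

private static async Task Run()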

{

DocumentClient documentClient = new DocumentClient(new Uri(endpointUri),

authKey);

}

When the Run method is executed, the client is instantiated against the local CosmosDB emulator.
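If you would rather not hard-code the endpoint and key, the sketch below shows one possible way to build the client from environment variables and check that it can connect. The variable names COSMOS_ENDPOINT and COSMOS_KEY and the ClientSketch class are hypothetical, made up for this illustration; treat it as a sketch rather than the way the sample repository does it.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.Documents.Client;

public static class ClientSketch
{
    // Hypothetical environment variable names, chosen for this illustration only.
    private const string EndpointVariable = "COSMOS_ENDPOINT";
    private const string KeyVariable = "COSMOS_KEY";

    public static async Task ConnectAsync()
    {
        // Fall back to the emulator endpoint from this post when no variable is set.
        string endpoint = Environment.GetEnvironmentVariable(EndpointVariable)
                          ?? "https://localhost:8081";
        string key = Environment.GetEnvironmentVariable(KeyVariable);
        if (string.IsNullOrEmpty(key))
        {
            throw new InvalidOperationException($"Set {KeyVariable} to your auth key.");
        }

        // DocumentClient is IDisposable, so a using block releases its connections.
        using (var client = new DocumentClient(new Uri(endpoint), key))
        {
            // OpenAsync initializes the client up front, so connection problems surface here.
            await client.OpenAsync();
            Console.WriteLine($"Connected to {client.ServiceEndpoint}");
        }
    }
}
```

In a long-running application you would normally keep a single DocumentClient alive and reuse it instead of disposing it after each operation.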

Step 5: Let's start building the features

The next step is to build the features listed above. Let's add the methods and call them from the async Run method.

Creating Database:

To create a new database programmatically, we make use of CreateDatabaseAsync() or CreateDatabaseIfNotExistsAsync(). When creating the database we pass the database name. Here is the code snippet:

private static async Task<Database> CreateDatabase(DocumentClient documentClient)

{

Database database = documentClient.CreateDatabaseQuery().Where(c => c.Id == "courseDatabase").AsEnumerable().FirstOrDefault();

if (database == null)

{

database = await documentClient.CreateDatabaseAsync(new Database()

{

Id = "courseDatabase"

});

}

return database;

}
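The snippet above checks for the database with a query before creating it. As a possible alternative, CreateDatabaseIfNotExistsAsync() does both in one call. Here is a minimal sketch; the DatabaseSketch class and EnsureDatabaseAsync method are hypothetical names used only for illustration.

```csharp
using System.Threading.Tasks;
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;

public static class DatabaseSketch
{
    // Returns the existing database with this id, or creates it when it is missing.
    public static async Task<Database> EnsureDatabaseAsync(DocumentClient client, string databaseId)
    {
        ResourceResponse<Database> response =
            await client.CreateDatabaseIfNotExistsAsync(new Database { Id = databaseId });

        // The response wraps the database resource along with request metadata.
        return response.Resource;
    }
}
```

Calling `await DatabaseSketch.EnsureDatabaseAsync(documentClient, "courseDatabase")` should give the same result as the CreateDatabase method above.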

When you refresh the local CosmosDB emulator page, you should see the database created in your local emulator as follows:

Creating Collection:

Once the database is created, we can then create a collection. We make use of CreateDocumentCollectionAsync() or CreateDocumentCollectionIfNotExistsAsync().

We will need to provide what is known as the database link (basically the URI at which the db can be reached) and the collection name to the create method. Here is the code snippet.

private static async Task<DocumentCollection> CreateDocumentCollection(DocumentClient documentClient, Database database)

{

DocumentCollection documentCollection = documentClient.CreateDocumentCollectionQuery(database.CollectionsLink).Where(c => c.Id == "courseDocumentCollection").AsEnumerable().FirstOrDefault();

if (documentCollection == null)

{

documentCollection = await documentClient.CreateDocumentCollectionAsync(database.SelfLink, new DocumentCollection()

{

Id = "courseDocumentCollection"

});

}

return documentCollection;

}
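The same shortcut exists for collections. The sketch below uses CreateDocumentCollectionIfNotExistsAsync() with a database URI built by UriFactory instead of the SelfLink; the CollectionSketch class and EnsureCollectionAsync method are hypothetical names for this illustration.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;

public static class CollectionSketch
{
    // Returns the existing collection, or creates it inside the given database.
    public static async Task<DocumentCollection> EnsureCollectionAsync(
        DocumentClient client, string databaseId, string collectionId)
    {
        // Build the database link from its id instead of reading Database.SelfLink.
        Uri databaseUri = UriFactory.CreateDatabaseUri(databaseId);

        ResourceResponse<DocumentCollection> response =
            await client.CreateDocumentCollectionIfNotExistsAsync(
                databaseUri,
                new DocumentCollection { Id = collectionId });

        return response.Resource;
    }
}
```

Working with ids and UriFactory means you do not need to keep the Database object around just to create the collection.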

Now you should see that the collection for courses is created as follows:

Creating Document:

After creating the database and collection, we can now create the documents. We make use of CreateDocumentAsync() for this purpose. We need to pass the URI of the collection under which we want to create the document, and the document data itself. In this example we use the Course data model I showed earlier and pass it to the create method. Here is the code snippet:

private static async Task CreateCourse(DocumentClient documentClient, DocumentCollection documentCollection)

{

Course course = new Course()

{

CourseId = Guid.NewGuid(),

Name = "En",

Teacher = new Teacher()

{

TeacherId = Guid.NewGuid(),

FullName = "Scott Hanselman",

Age = 44

},

Students = new List<Student>()

{

new Student(){

FullName = "Trump",

StudentId = Guid.NewGuid()

}

},

Sessions = new List<Session>(){

new Session(){

SessionId = Guid.NewGuid(),

Name = "CosmosDB",

MaterialsCount = 10

},

new Session(){

SessionId = Guid.NewGuid(),

Name = "Ch1",

MaterialsCount = 3

}

}

};

Document document = await documentClient.CreateDocumentAsync(documentCollection.DocumentsLink, course);

}
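CreateDocumentAsync() also accepts a plain object and a collection URI, and its response exposes the request charge of the operation. The following is a minimal sketch under those assumptions; the DocumentSketch class, the InsertAsync method and the sample property values are hypothetical and not part of the Course model above.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;

public static class DocumentSketch
{
    // Inserts a small sample document and returns the id that the service generated.
    public static async Task<string> InsertAsync(
        DocumentClient client, string databaseId, string collectionId)
    {
        Uri collectionUri = UriFactory.CreateDocumentCollectionUri(databaseId, collectionId);

        // Any JSON-serializable object works; no "id" is set, so one is generated automatically.
        var item = new { Name = "Sample course", Topic = "CosmosDB" };

        ResourceResponse<Document> response =
            await client.CreateDocumentAsync(collectionUri, item);

        // RequestCharge reports how many request units the write consumed.
        Console.WriteLine($"Request charge: {response.RequestCharge} RUs");
        return response.Resource.Id;
    }
}
```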

You should see the document inserted in the local emulator as follows.

Querying Document:

Now that we have created a document, we can see how to query it. We can use the CreateDocumentQuery() method for this purpose. We need to pass the link of the collection we want to query. We can then build the query as a LINQ expression and the client library does the rest. This is the best part of the client library: it translates your LINQ expression into a CosmosDB SQL query, without me having to rack my brain constructing those queries by hand. Here is the code snippet:

private static Course QueryCourse(Guid guid, String dbName, DocumentClient documentClient, string collectionName)

{

Course selectedCourse = documentClient.CreateDocumentQuery<Course>(

UriFactory.CreateDocumentCollectionUri(dbName, collectionName))

// Match the course name set in CreateCourse ("CosmosDB" is a session name, not a course name)

.Where(v => v.Name == "En")

.AsEnumerable()

.FirstOrDefault();

return selectedCourse;

}

Note that you will need to import System.Linq for the LINQ expression to work.
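If you prefer to write the query text yourself rather than use LINQ, the same method accepts a parameterized SqlQuerySpec. Below is a minimal sketch; the QuerySketch class and FindByName method are hypothetical names, and it returns raw Document objects so that it does not depend on the Course model.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;

public static class QuerySketch
{
    // Finds all documents whose "Name" property equals the given value.
    public static List<Document> FindByName(
        DocumentClient client, string databaseId, string collectionId, string name)
    {
        Uri collectionUri = UriFactory.CreateDocumentCollectionUri(databaseId, collectionId);

        // A parameterized query keeps user input out of the query text itself.
        var query = new SqlQuerySpec
        {
            QueryText = "SELECT * FROM c WHERE c.Name = @name",
            Parameters = new SqlParameterCollection { new SqlParameter("@name", name) }
        };

        // ToList() runs the query synchronously and materializes every match.
        return client.CreateDocumentQuery<Document>(collectionUri, query).ToList();
    }
}
```

For the course created earlier, `QuerySketch.FindByName(documentClient, "courseDatabase", "courseDocumentCollection", "En")` should return that document.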

Deleting Database:

Finally, we can make use of DeleteDatabaseAsync() method to delete the database programmatically. We will need to provide the database link to the delete method. We can use the UriFactory.CreateDatabaseUri() helper method to create the database link. Here is the code snippet:

await documentClient.DeleteDatabaseAsync(UriFactory.CreateDatabaseUri(dbName));
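Deleting a database that does not exist makes the client throw a DocumentClientException with a NotFound status. One possible way to make the cleanup safe to run repeatedly is sketched below; the CleanupSketch class and TryDeleteDatabaseAsync method are hypothetical names for this illustration.

```csharp
using System.Net;
using System.Threading.Tasks;
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;

public static class CleanupSketch
{
    // Deletes the database; returns false instead of throwing when it is already gone.
    public static async Task<bool> TryDeleteDatabaseAsync(DocumentClient client, string databaseId)
    {
        try
        {
            await client.DeleteDatabaseAsync(UriFactory.CreateDatabaseUri(databaseId));
            return true;
        }
        catch (DocumentClientException ex) when (ex.StatusCode == HttpStatusCode.NotFound)
        {
            // Nothing to delete: the database did not exist.
            return false;
        }
    }
}
```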

Well, those are the main features that the Azure CosmosDB client provides. If you are stuck on any of the steps above, you can check out the repository I have added with the samples.

Happy Coding! Let's spread Azure's CosmosDB to the world.

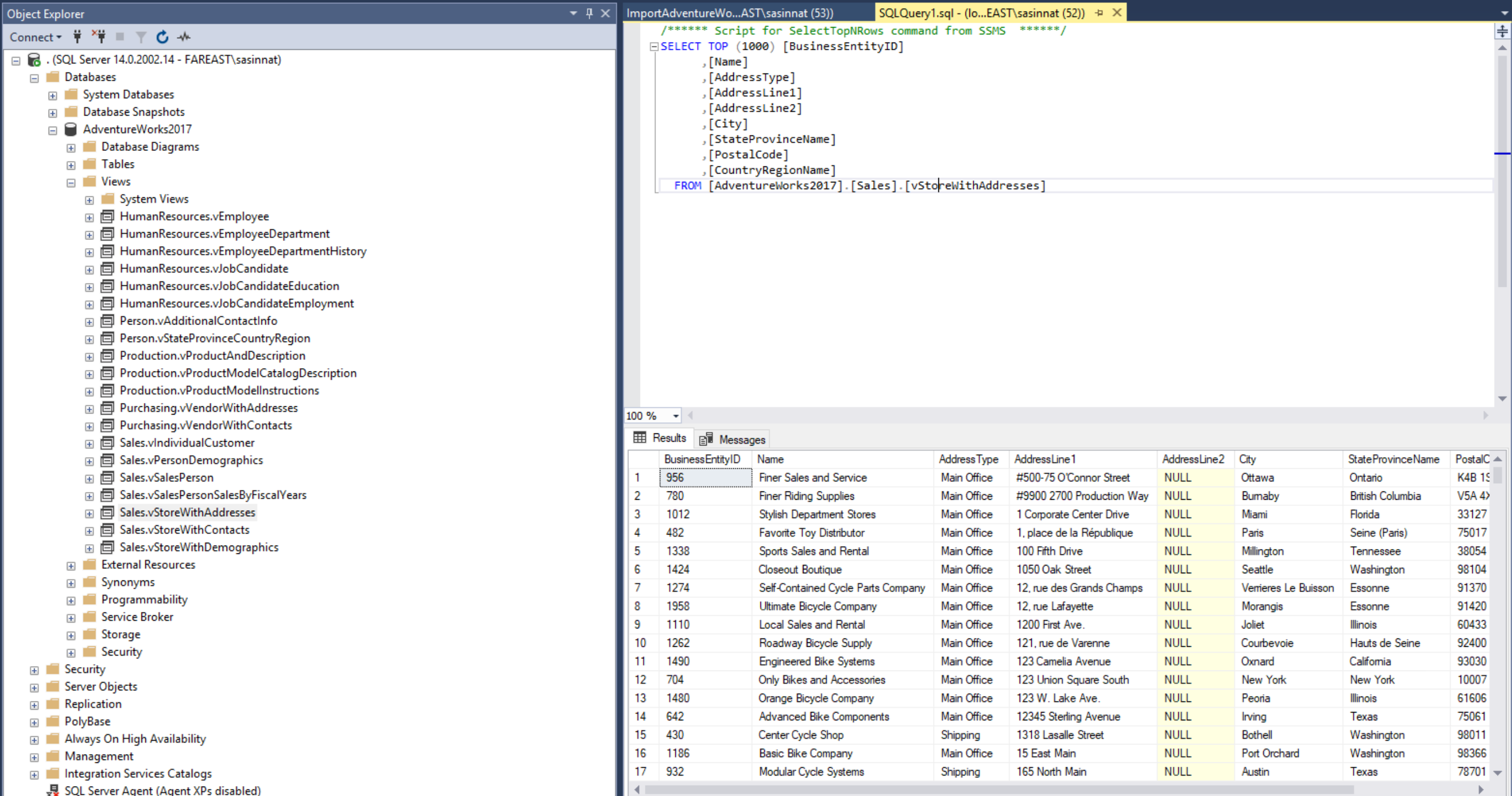

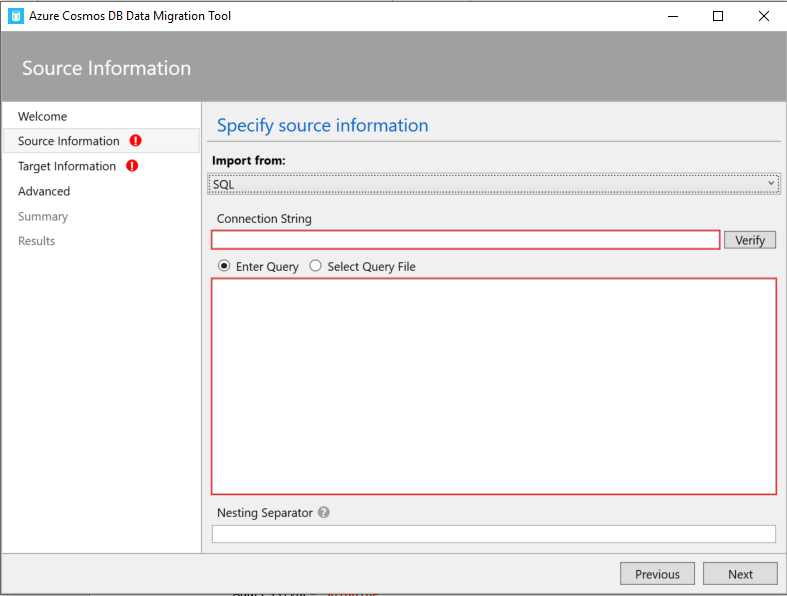

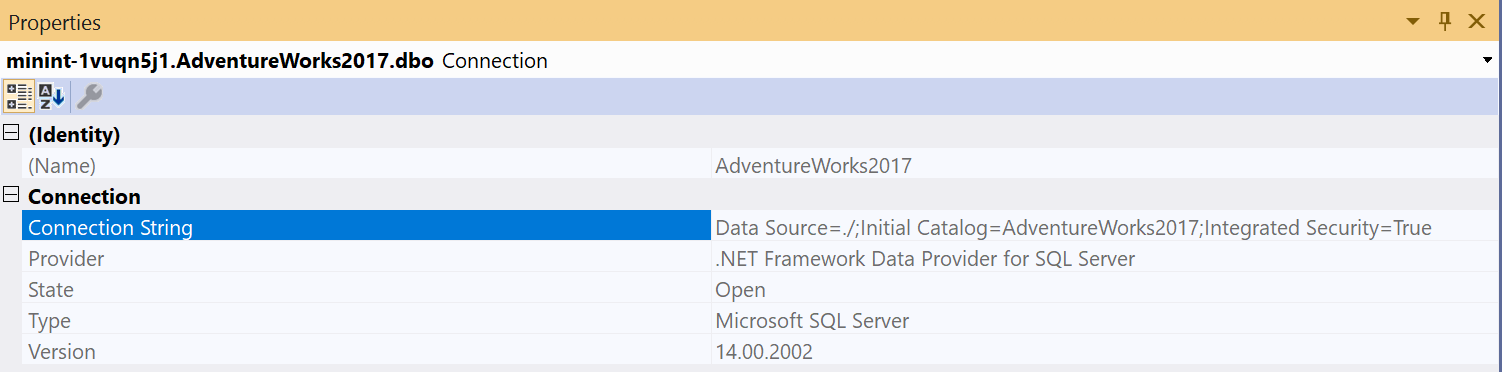

Once you enter the connection string, verify that it works by clicking Verify.

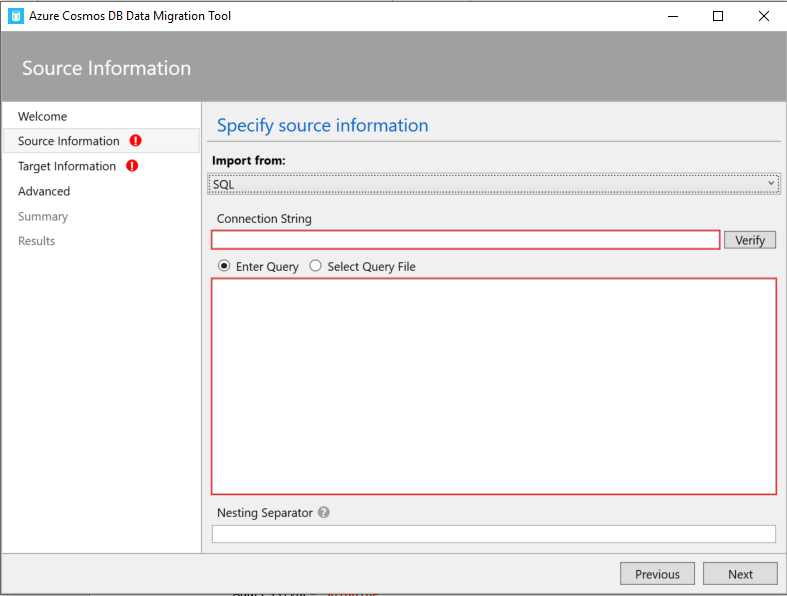

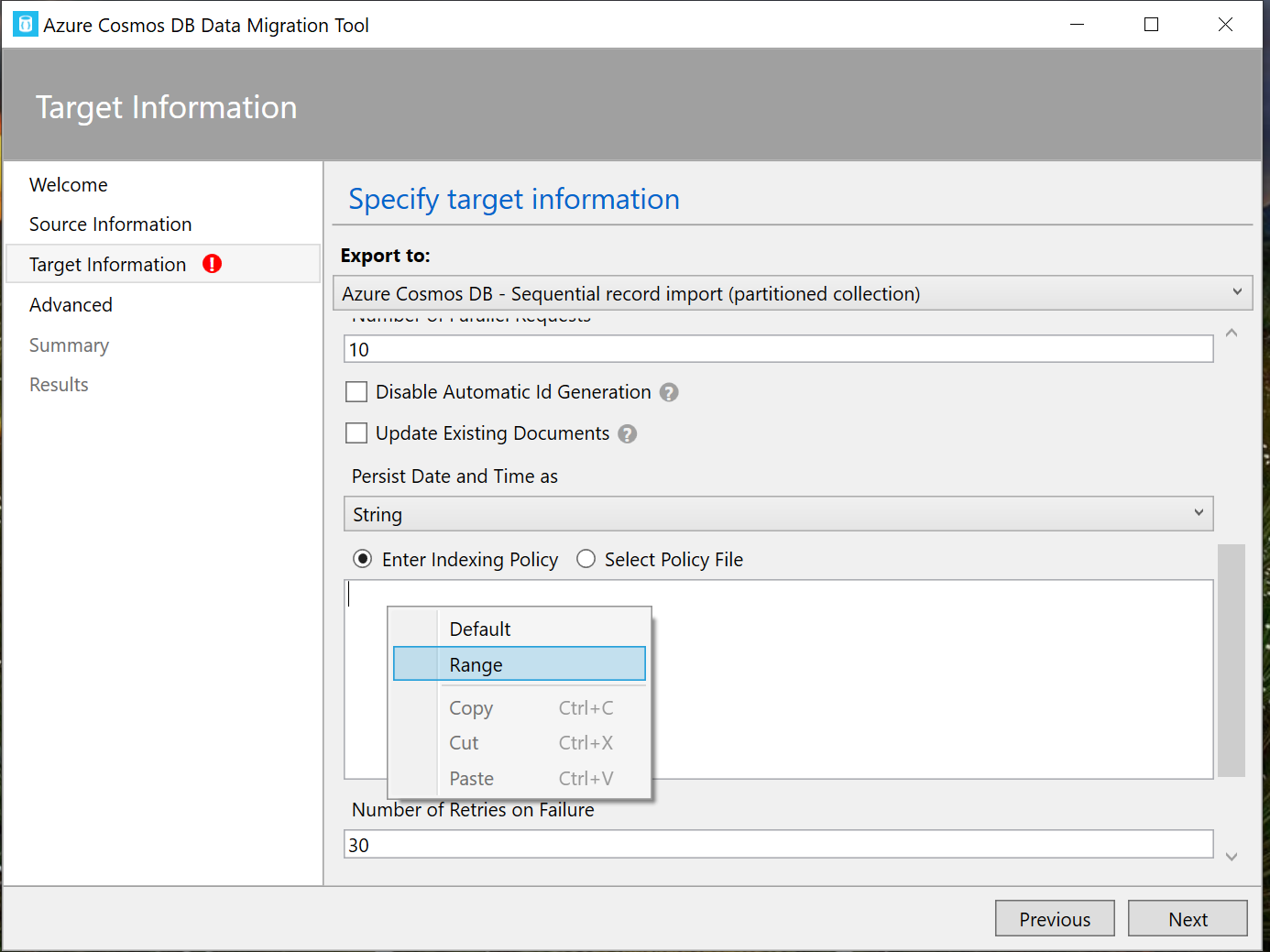

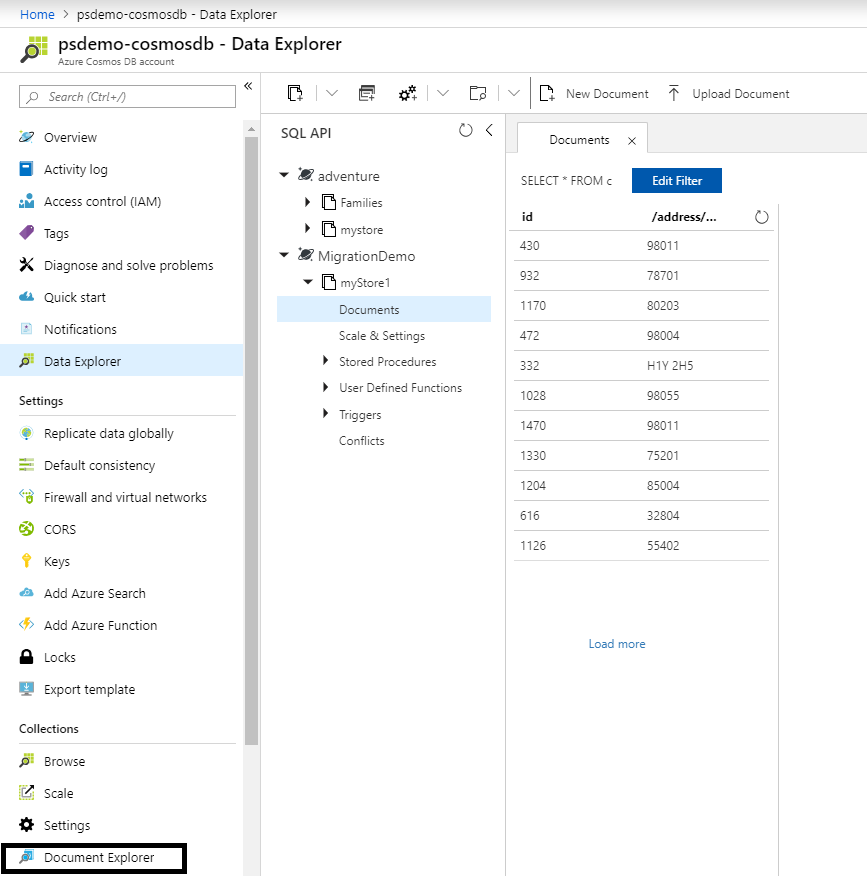

Once you copy and paste the connection string, one extra thing you need to do is append the database name to the connection string: https://gist.github.com/sajeetharan/1e6e21339820b8437b1d9542e8133d51#file-sajeetharan-com\_4192016\_mssql\_cosmosdb You can verify the connection string by clicking the Verify button. Give the collection any name you prefer. It is important to define a partition key in order to query the data later; the partition key is used to group multiple documents together within physical partitions. Let's partition by “/address/postalCode”, which is the postal code nested beneath address. For throughput, we'll just go with the default of a thousand request units per second. One more thing: we need to set the indexing policy. You'll notice a large text box where you can set it. I want to choose the range indexing policy, which you can do by right-clicking inside the text box and selecting it from the context menu.

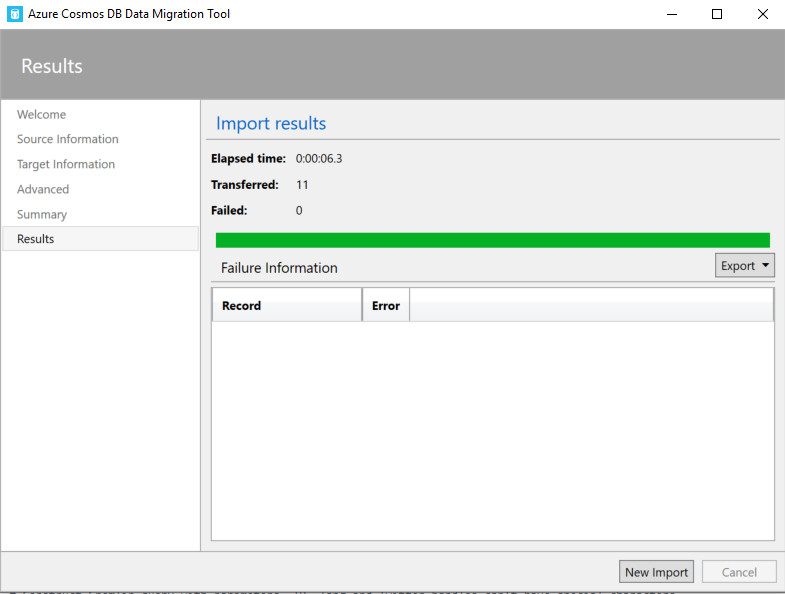

Just click Next and you will be taken to the summary page, where you can review the Migration steps at once.

That's how you migrate data from SQL Server to CosmosDB, and it is very easy using the CosmosDB migration tool. For the other modes of data migration, I will be writing separate blogs. I hope this blog helps anyone who wants to transform and migrate data from MSSQL to CosmosDB. Cheers!
